At times it can feel like information overload when it comes to the nebulous concept of AI in the law — and especially in ediscovery. Despite the fact that we are drowning in a sea of data and bombarded by AI hype, there does not seem to be much consensus on how real and beneficial AI in legal tech currently is. Nor is there clarity on what the best practices are for effectively and defensibly deploying AI-powered technology in your ediscovery and managed review workflow.

Today, the use of AI-powered technology in ediscovery raises more questions than it answers. So, do we believe the hype and jump into using AI in ediscovery with both feet, or take a more cautious approach? What do you do if you are using an AI-powered tool and the result is, shall we say, unexpected? Are there industry-validated methods, workflows, or approaches that practitioners can deploy to improve the accuracy and defensibility of their AI workflows? And what pitfalls should people be aware of as they embark on an AI deployment?

Before you start hyperventilating and run for the hills, let’s unpack these concerns, separate the hype from reality, and walk through some best practices to improve the deployment of AI-powered solutions in your ediscovery practice. First, let’s clarify what AI can and cannot do for legal practitioners.

Expectations vs. Reality

Before evaluating concerns around AI in ediscovery, it is helpful to level set about what AI currently does and does not do for legal practitioners. For this article, we will define “legal AI” as a suite of advanced machine learning, data visualization, and AI-powered tools deployed in concert to augment and amplify legal practitioners’ insights and decision making.

AI is not an easy button and cannot magically generate amazing time and cost savings in the absence of knowledgeable human guidance. Nor is it a glorious machine that can make up for bad data inputs, poor workflow management, or inept review. It can, however, amplify and better inform human decision making, thereby drastically reducing the time to evidence and insight on a matter.

Expectation: It’s Skynet

Science fiction and Arnold Schwarzenegger primed us for sentient robot overlords with cognitive capabilities far outpacing humans. When you are expecting something akin to Skynet, the current state of AI may seem like a letdown — but this does not mean that you are not seeing AI at work. Rather, it is a question of having appropriate expectations grounded in the current state of technical capability. It is important to understand that these Hollywood depictions are aspirational representations of what a much more advanced AI could look like, and not the current state of AI.

Expectation: AI is new and scary

Rather than being novel and unproven, the technology underpinning legal AI is based on established and validated concepts dating from the 1950s. From retail to finance, big pharma to media, AI is deployed more broadly and integrated more meaningfully in many other industries than it is in law. One need look no further than a cell phone to see the broad-reaching impact of AI in daily life (think Netflix queues, Facebook’s People You May Know, geolocation tools, and Google’s predictive search queries, to name a few).

Expectation: Legal AI is not real AI (or is it?)

Depending on who you speak with, it is debatable whether what legal technologists are calling AI meets the true definition of AI. Whatever the answer, the legal industry at large lags significantly behind many others in adopting it.

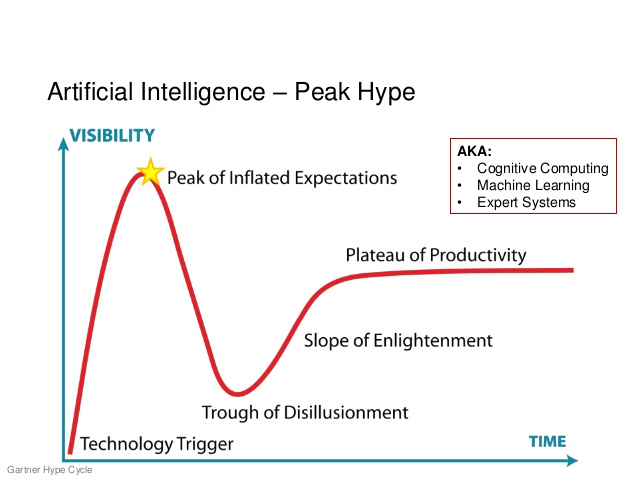

Gartner hype cycle for AI (via Wikipedia)

But I thought legal AI was my easy button!

Legal AI is an amazing step in the right direction, but no matter how advanced it becomes, the algorithm cannot think for you. The saying “garbage in, garbage out” is especially apt in the case of legal AI. Whether you are using a continuous learning model or more basic machine learning technology, it is imperative that practitioners deploy a sound workflow and subjective human reasoning to ensure that legal AI is effective. At a high level, that means methods should be statistically defensible, be deployed within a manageable scope, and provide insight beyond simple search terms.

To see the full benefits, the process of training and supervising AI should not be cut short. A statistically defensible process requires making sound decisions about sampling the data. Getting meaningful insights requires tracking what the AI is being taught and making sure that it doesn’t learn bad habits from its teachers. Furthermore, an AI model is trained for only one specific purpose; it isn’t a panacea that cures all ediscovery woes. AI enables us to find responsive content, but it doesn’t magically make document review disappear.
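To make the point about sampling concrete, here is a minimal Python sketch of one common way to size a simple random sample for estimating what share of a collection is responsive. It is an illustration, not a prescribed workflow: it assumes the normal approximation for a proportion with a finite population correction and a worst-case prevalence of 50%. The function names `sample_size` and `draw_sample`, the seed, and the 100,000-document collection are all hypothetical.

```python
import math
import random
from statistics import NormalDist


def sample_size(population, margin_of_error=0.05, confidence=0.95, prevalence=0.5):
    """Estimate how many documents to sample to measure a proportion.

    Assumes the normal approximation with a finite population correction.
    prevalence=0.5 is the conservative (largest-sample) assumption.
    """
    # Two-sided critical value for the chosen confidence level.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Sample size for an effectively infinite population.
    n0 = z ** 2 * prevalence * (1 - prevalence) / margin_of_error ** 2
    # Shrink the sample to account for the finite collection size.
    n = n0 / (1 + (n0 - 1) / population)
    return min(population, math.ceil(n))


def draw_sample(doc_ids, n, seed=2024):
    """Draw a reproducible simple random sample without replacement."""
    rng = random.Random(seed)  # a fixed seed makes the draw repeatable
    return rng.sample(doc_ids, n)


# Hypothetical collection of 100,000 document IDs.
doc_ids = list(range(100_000))
n = sample_size(len(doc_ids))
sample = draw_sample(doc_ids, n)
print(n, len(sample))
```

Recording the seed, the confidence level, and the margin of error alongside the sample is one simple way to let someone else reproduce and check the draw later.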

Exploring the realities of AI

Given these more realistic expectations of legal AI, the next step is digging into what legal AI is and what models are currently being deployed.